- ABP Framework version: v9.0.2

- UI Type: Angular

- Database System: EF Core (PostgreSQL)

- Tiered (for MVC) or Auth Server Separated (for Angular): yes

Hello,

I am trying to intercept my service from my AKS cluster. Everything works fine: I can intercept the call and redirect it to my local machine.

But when I debug the app from Visual Studio or JetBrains, the environment variables are not overridden; the app gets the appsettings.json values instead. How does ABP Studio override the environment variables so that they match the ones of the service? Am I doing something wrong? Thank you for the help.

8 Answer(s)

-

0

-

0

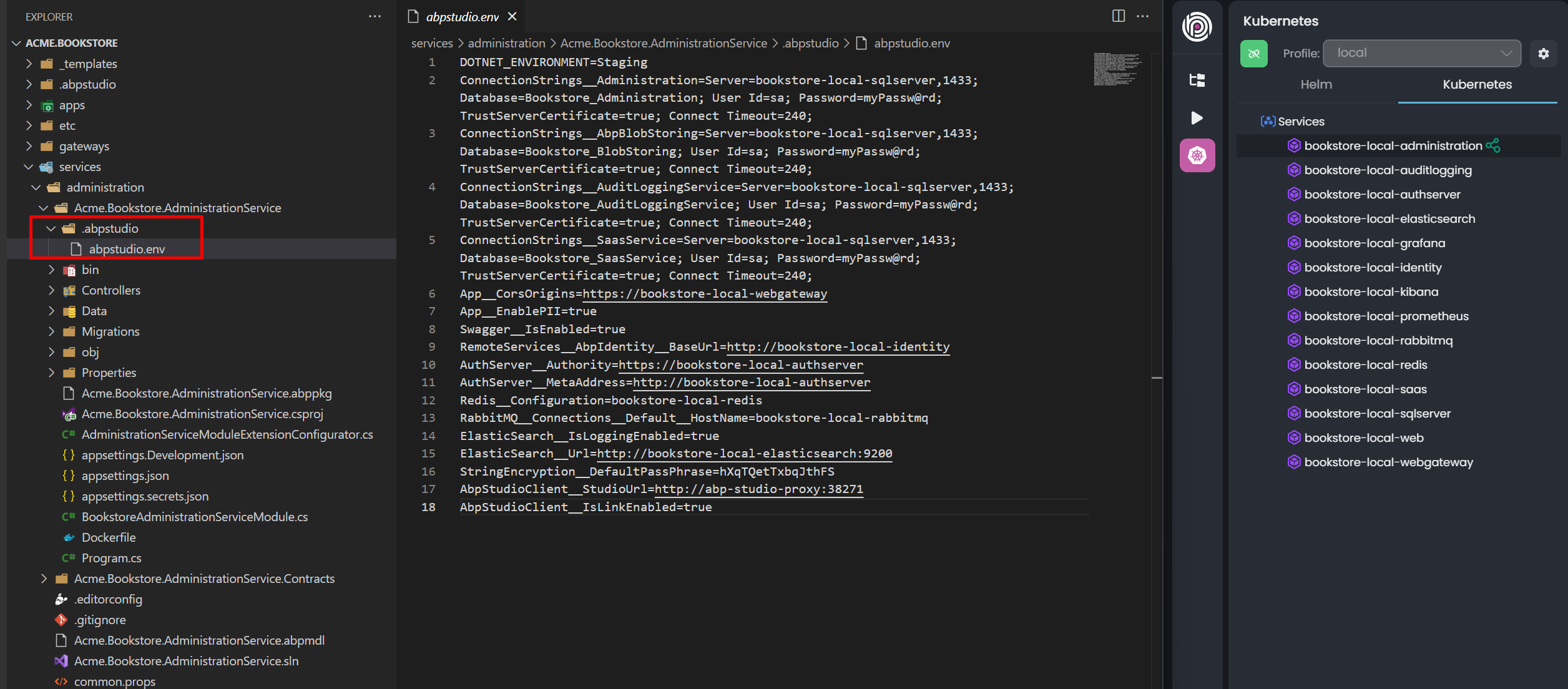

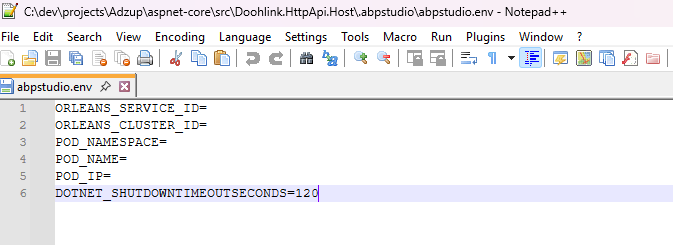

Hello, yes, I have the file inside the folder when I intercept. But the environment variables inside the file do not reflect the environment variables inside my pod. I use Microsoft Orleans with my ABP project, and only the Orleans environment variables are in it.

The application that runs on the cluster is an ABP 8.3 app, so in production the Volo.Abp.Studio.Client.AspNetCore NuGet package does not exist. (I am in the middle of upgrading to .NET 9.0; I didn't use ABP Studio before and am planning to include it now.) How are the environment variables in this file injected into my app when I debug? Does this NuGet package read the file and change the environment variables when I start my app locally? Even so, I can't understand why the abpstudio.env file does not include the environment variables that are set in my pod.

-

0

-

0

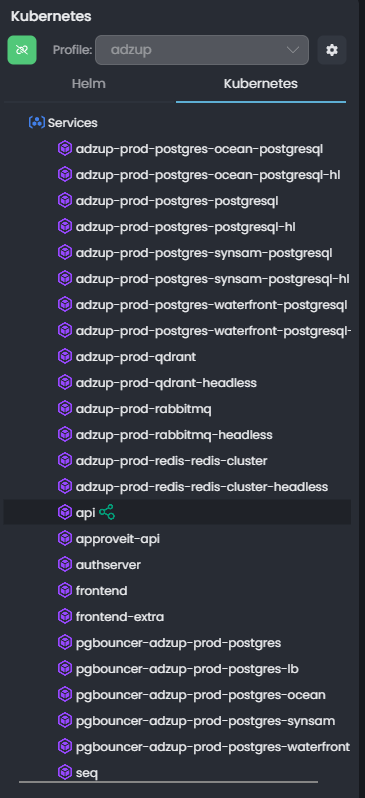

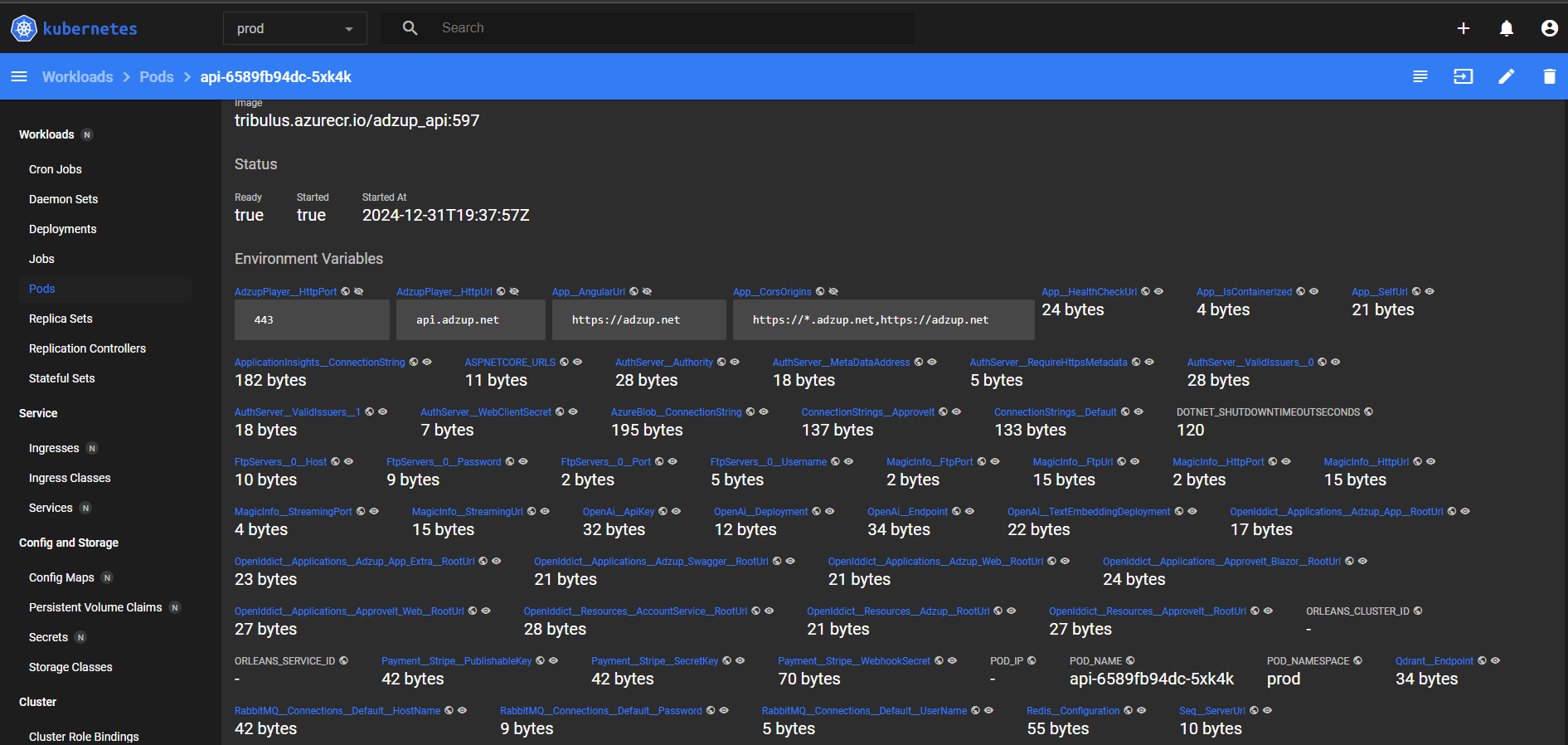

Hello again, I already have the environment variables in my pod. The thing is, when I intercept, they are not reflected in the .env file generated by ABP Studio. Here is a snapshot that I took from the Kubernetes dashboard.

So I believe something is wrong with transferring the environment variables.

Can you also explain how these environment variables are injected when you debug locally? Is the Volo.Abp.Studio.Client.AspNetCore package responsible for that?

-

0

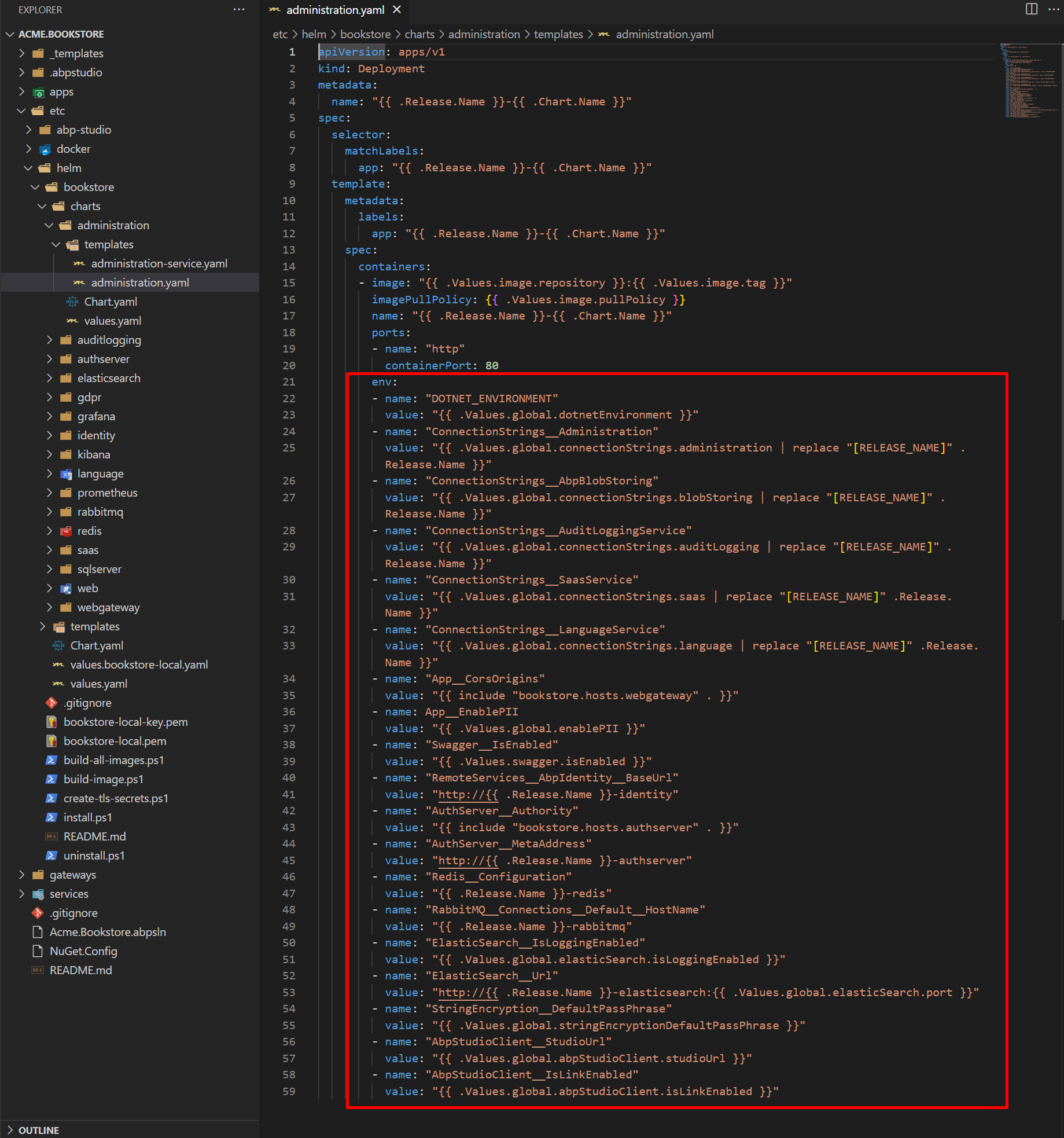

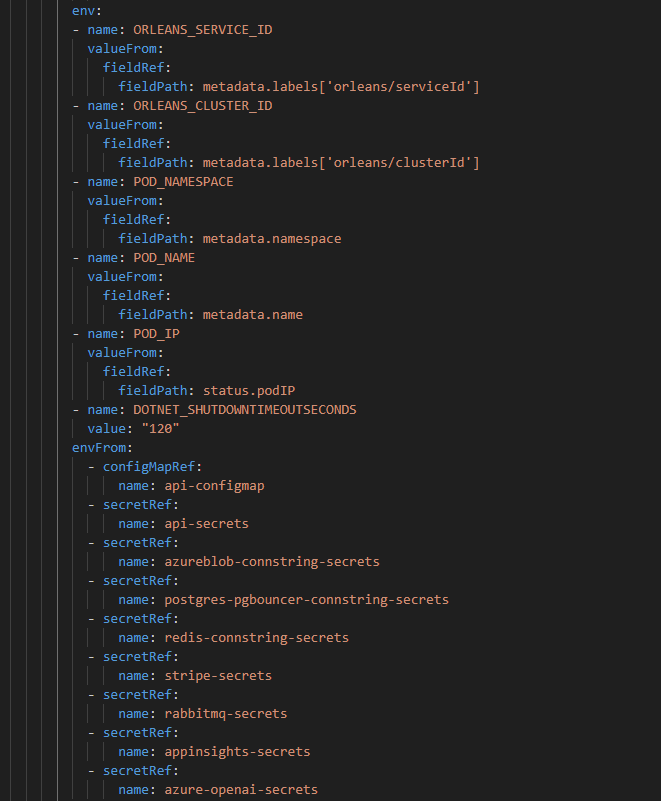

OK, I think I kind of understand what the problem is. My deployment is something like this.

As you can see, the problem occurs when the environment variables come from configMapRef or secretRef. I believe the code that reads the environment variables only looks at the deployment YAML and tries to take them from there; it does not actually look at the pod itself. Am I right? I cannot change the deployment file, since I prepared my whole cluster around it. Is there anything I can do to fix it?
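For readers who cannot see the screenshots, here is a minimal sketch of the two styles being contrasted. Every name and value below is a placeholder, not the actual manifest; the only fixed parts are the Kubernetes fields `env`, `envFrom`, `configMapRef`, and `secretRef`.

```yaml
# Hypothetical container spec; names and values are placeholders.
containers:
  - name: api
    image: example/api:latest
    env:
      # Declared inline, so the name and value are readable from the YAML itself.
      - name: ASPNETCORE_ENVIRONMENT
        value: Production
    envFrom:
      # Only a reference appears in the YAML. Kubernetes resolves the actual
      # keys and values from the ConfigMap or Secret when the pod starts.
      - configMapRef:
          name: api-config
      - secretRef:
          name: api-secrets
```

A tool that reads only the inline `env` list would see the first variable and none of the ones supplied through `envFrom`, which would match the behaviour described above.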

-

0

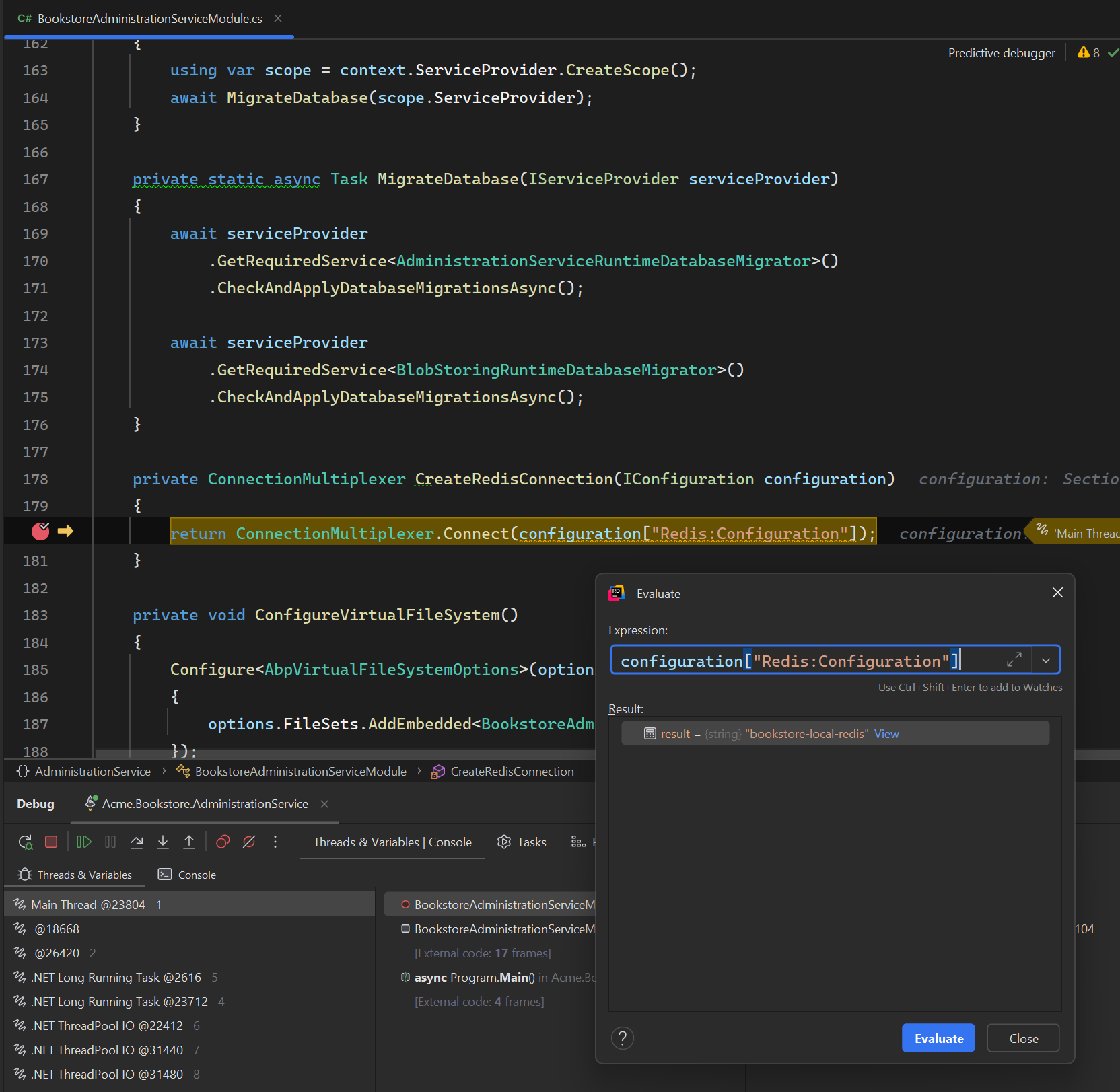

I am trying to make this work. Here is what I have done to fix the problem: I wrote a PowerShell script that gets the environment variables from my pod, and after I intercept, I replace the .env file with its output. Here is the script that I used.

```powershell
param (
    [string]$app,
    [string]$context
)

# Change the context
kubectl config use-context $context

# Get the directory of the current script
$scriptDir = Split-Path -Parent $MyInvocation.MyCommand.Definition

# Get the pod name
$podName = kubectl get pods -n prod -l app=$app -o jsonpath='{.items[0].metadata.name}'

# Get the environment variables
$envVars = kubectl exec -n prod $podName -- printenv

# Define the output file path
$outputFilePath = Join-Path -Path $scriptDir -ChildPath "abpstudio.env"

# Write the environment variables to the output file
$envVars | Out-File -FilePath $outputFilePath
```

Then I added this line to my app startup, which wasn't there. That's why I couldn't get the environment variables.

```csharp
AbpStudioEnvironmentVariableLoader.Load();
```

Then the app runs as it should, with the environment variables injected. My app's port is 44389 in my local environment, and it runs on that port. When I open a browser and go to https://localhost:44389, I can see my app running. I can also see it at https://host.docker.internal:44389. But when I try to reach my service from outside with the domain name, or internally with something like http://api, I cannot reach it and nginx throws an error like

I think this is the last part I need to figure out. Can you explain how traffic from nginx is forwarded to my local computer? I know that there is a client Docker container running on my machine and a server in my cluster. But it seems the interception does not happen when I hit the nginx ingress.
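A side note on the export script earlier in this post: `printenv` dumps everything in the pod, including variables such as `PATH` or `HOME` that describe the container rather than the app. Whether copying those to a local machine causes trouble depends on how the loader applies them, so treat the following as an optional sketch. `Select-AppEnvVars` and its default skip list are hypothetical and are not part of ABP Studio.

```powershell
# Hypothetical helper (not part of ABP Studio): filters raw `printenv` output.
function Select-AppEnvVars {
    param (
        [string[]]$Lines,
        # Assumed skip list: variables that describe the container, not the app.
        [string[]]$Skip = @('PATH', 'HOME', 'HOSTNAME', 'PWD', 'TERM')
    )

    foreach ($line in $Lines) {
        # Split on the first '=' only, so values that contain '=' stay intact.
        $name, $value = $line -split '=', 2

        # Ignore lines that are not NAME=value pairs.
        if ([string]::IsNullOrWhiteSpace($name) -or $null -eq $value) { continue }

        # Ignore names on the skip list.
        if ($Skip -contains $name) { continue }

        # Emit the original line unchanged.
        $line
    }
}
```

With the variables from the script above, the last line would become `Select-AppEnvVars -Lines $envVars | Out-File -FilePath $outputFilePath`.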

-

0

Hi @cangunaydin

Does the problem continue?

-

0

Hello, I figured it out @yekalkan. The problem was that I was debugging over https instead of http. That’s why it didn’t hit the breakpoint. It would still be nice for ABP Studio to get the values from the pod instead of the YAML file.