We wanted to understand how audit logging is produced, so we decided to first override the AuditLogMiddleware from ABP and disable the write of the audit log info. If we then refresh the page and check auditlog we still see information being written to data base:

Could you let us know where and how these are logged to audit log? Or how they are bypassing the middleware? Can we disable audit logging for only API controllers? Do we need to override the Interceptor too?

Hi liangshiwei,

Sometimes rubber ducking works in mysterious ways.

We found out on Azure that we are using Deployment slots, so what happened is that after a successful deployment "Slot B" is receiving 0% of traffic and "Slot A" is getting 100% of traffic. However, B is still running, meaning that the BackgroundWorker is also still running.

This seems like a very plausible explanation of how certain data was being 'hijacked' by a ghost process. We don't know why the messages are immediately flagged as abandoned by that instance, we cannot access its logging, but at the same time we kinda don't care. So we now deleted that deployment slot and will monitor the table again, but we're confident this should solve our mystery!

Thanks for your patience and time to troubleshoot with us.

Hi,

Only

BackgroundJobWorkeris operating theAbpBackgroundJobstable https://github.com/abpframework/abp/blob/rel-8.0/framework/src/Volo.Abp.BackgroundJobs/Volo/Abp/BackgroundJobs/BackgroundJobWorker.cs#L42-L112It will try to retry to execute the Job If an exception occurs. When the max retry count is reached, it will abandon the job without logging the exception https://github.com/abpframework/abp/blob/rel-8.0/framework/src/Volo.Abp.BackgroundJobs/Volo/Abp/BackgroundJobs/BackgroundJobWorker.cs#L82

I understand how the 'normal' process works, but what is happening is abnormal behavior. The reason I am mentioning that the exception is not being logged is because we specifically mentioned that we are using our own worker that has explicit logging which is not showing up. If the Abandoning were being done by our worker, it would have produced logging, it is not.

There is no other process running on the web server (app service) other than our ABP application (w3wp.exe).

The combination of RetryCount = 1 and IsAbandoned = 1 also indicates that the normal behavior is not being triggered, because otherwise it would have retried the job, but it does not. "It" immediately concludes that the job is unprocessable, which is indicated by the fact that those 100 jobs never reached the worker.

What modification can we make to ABP plumbing to see which process/code is sending the update statement?

We already added the MachineName to JobInfo to ensure that the production app service is the one adding the messages to the queue. We'd love to add more information to ExtraProperties, but while the Entity has this property, the Dto does not, so it won't be persisted after mapping.

We now have 7 unprocessed jobs in the table, setting them to IsAbandoned = 0, the logs show that our worker is immediately processing them and the table is empty. So 'something' is preventing from those messages ever reaching our worker.

Yes, not being able to reproduce this behavior, you could immediately conclude that you cannot do anything. However, you wrote the entire infrastructure and plumbing of the background component and related functionality, so we ask you to just please help us figuring out what is happening, why it is happening and how this hopefully can be addressed, as we are hard stuck.

In order to do our part, I will explain what we did and perhaps we can figure out together why this behavior is happening. Also, judging by the previous threads created on similar issues related to IsAbandoned being set without any logging output, it could perhaps be something nested very deep within ABP. Without any logging output from the underlying components it's incredibly hard to debug this. So here is what we did/tried (and yes perhaps we did it terribly wrong, but we are not ABP experts...yet):

Configure<AbpBackgroundJobOptions>(options =>

{

options.IsJobExecutionEnabled = false; // Let's assume the configuration returned false

options.AddJob<SendGridMailerJob>(); // This processes the emails needing to be send by SendGrid

options.AddJob<UpdateTrackServiceJob>(); // This processes 'Tracking' information that needs to be forwarded to an external API

});

Justification: We need the ability to completely disable background job processing. One major factor here is being able to process the jobs locally with debugging enabled in order to verify or troubleshoot in case we see anything going wrong on Production. The assumption is that at this moment "we/our code" is the only process that is working on the (pending) background jobs.

Behavior: Jobs are set to IsAbandoned = true no logging is produced, no exceptions are seemingly captured. Enabling output logging on our DbContext also shows no SQL responsible for performing the UPDATE, yet refreshing the table you can see that RetryCount = 1 and IsAbandoned = true.

Analysis: When an exception does occur, we can immediately see output logging, and the job is retried several tries before IsAbandoned = true is set. However on "these special occasions" it is set immediately after the first try. NextTryTime is not adjusted and the RetryCount is never increased afterwards.

Hypothesis: Are there other DbContext at play that are querying and writing to the **AbpBackgroundJobs** table? How can we verify this? What could we overwrite or configure to visualize this behavior with logging?

Conclusion: We are stumped, we cannot explain this behavior. Luckily the source code is on GitHub, so we figured it is time to start overriding some code or classes to see if we can learn anything about background behavior in ABP.

This is being called in PostConfigureServices in our own module, which we believe is loaded and processed last in the chain. We are highly skeptical about having this code here, but we also are unable to verify if ReplaceServices and/or ReplaceContext is enough.

context.Services.RemoveAll[IBackgroundWorker]();

context.Services.RemoveAll[IBackgroundJobWorker]();

context.Services.RemoveAll[IBackgroundJobManager]();

context.Services.RemoveAll[BackgroundJobWorker]();

context.Services.RemoveAll[BackgroundEmailSendingJob]();

context.Services.RemoveAll[NullBackgroundJobManager]();

context.Services.RemoveAll[DefaultBackgroundJobManager]();

Justification: We want to intercept all the calls being made so we can add logging output and observe behavior about background jobs being picked up. We also want to make sure that "our" worker is the only process actually processing background jobs (see next section).

Behavior: Jobs are still set to IsAbandoned = true and again no logging is produced when this occurs.

Conclusion: We need to implement our own BackgroundWorker and add more logging to understand what is happening.

We created our own AcmeBackgroundWorker class and copied the BackgroundJobWorker class line by line to ensure we are compliant and also because I am not going to pretend to understand every bit of code in there (we do, but that's not the point). We've extended the ExecuteAsync method and added verbose logging.

This is how the class is currently defined:

[Dependency(ReplaceServices = true)]

public sealed class AcmeBackgroundWorker : AsyncPeriodicBackgroundWorkerBase, IBackgroundJobWorker, IBackgroundWorker

{

// Implementation

}

// In the same 'PostConfigureServices' block as shown above at the end we then added

context.Services.AddTransient<IBackgroundWorker, AcmeBackgroundWorker>(); // Not sure if both is needed but we saw both in the ServiceCollection

context.Services.AddTransient<IBackgroundJobWorker, AcmeBackgroundWorker>(); // Not sure if both is needed but we saw both in the ServiceCollection

context.Services.AddTransient<IBackgroundJobManager, DefaultBackgroundJobManager>(); // This just queues the messages, so can be restored originally

Justification: We intially thought that the ReplaceServices would be enough to override everything, but since the jobs are still being processed by a ghost worker, we left it despite the fact we are already manually calling RemoveAll.

These are the parts were we added our own logging just to see what is happening, both when everything goes right, but especially when things go wrong (i.e. an Exception is thrown).

var pendingJobs = await _acmeService.GetPendingBackgroundJobsAsync();

// Let's output how many jobs are pending

Logger.LogInformation("[AcmeBackgroundWorker] Retrieved '{jobCount}' pending background job(s).", pendingJobs.Count);

if (pendingJobs.IsNullOrEmpty()) return;

// <snip>

// We will log which Job is being called to process the message

Logger.LogInformation("[AcmeBackgroundWorker] Executing {jobType}.ExecuteAsync()...", currentJob.JobName);

// <Execute the registered Job instance to process the job>

// We will indicate when all is successful and thus the job will be removed from the table

Logger.LogInformation("[AcmeBackgroundWorker] Deleting successfully executed '{jobType}' job.", currentJob.JobName);

// <snip>

// This is code where the Exceptions are 'caught' and IsAbandoned is set to 'true' by the Worker (2 occurences)

Logger.LogWarning("[AcmeBackgroundWorker] Setting IsAbandoned = true for '{jobType}'.", currentJob.JobName);

Justification: We used LogInformation as we want to be able to see these messages on Production, normally this would be regarded as debug output. We also added these specific statements, almost to the point of over completeness, because we really wanted to have this verbose output whenever a job is being processed.

Behavior: When it "works normally" and if an exception is thrown logging output is shown as expected. But when the ghost worker has done something no output is produced, breakpoints inside our worker are never hit, but the result is still that IsAbandoned = true is written to the DB. Meanwhile in the logs we can see the following:

2024-04-10 11:59:43.856 +02:00 [INF] [AcmeBackgroundWorker] Retrieved '0' pending background job(s).

2024-04-10 11:59:48.869 +02:00 [INF] [AcmeBackgroundWorker] Retrieved '0' pending background job(s).

According to our worker instance there were no pending jobs, even when we set the BackgroundJob to IsAbandoned = 0 using SQL directly. When doing a SELECT right after the UPDATE we can see that IsAbandoned is 0. No breakpoints are triggered, and doing a SELECT right after shows that IsAbandoned is set to 1. All the while the logs are only showing our own custom added logging output, ABP by itself produces zero logging when background job processing seemingly fails.

Conclusion: We are completely flabbergasted what is processing the AbpBackgroundJobs table, considering we believe that we eliminated "everything" from the pipeline?

We currently have 272 failed BackgroundJobs stored in the database. So we figured, why not execute a SQL script that will effectively reset all the jobs back to their initial state so they can be reprocessed by our worker.

The SQL we executed is as follows:

UPDATE [AbpBackgroundJobs]

SET LastTryTime = NULL, TryCount = 0, NextTryTime = GETDATE(), IsAbandoned = 0

272 row(s) affected

Then we wait and observe what the logfiles are telling us:

2024-04-10 13:25:08.255 +02:00 [INF] [AcmeBackgroundWorker] Retrieved '172' pending background job(s).

If we then look in the database we can see that exactly 100 BackgroundJob rows are IsAbandoned = true with RetryCount = 1 and these records thus never made it to our AcmeBackgroundWorker. So what has picked up this data? Why were they flagged as unprocesable?

We repeated this process several times and each time only a portion of background jobs is processed, the rest is abandoned. After about 4 retries the queue is now fully depleted and all messages are seemingly processed, resulting in an empty AbpBackgroundJobs table.

What else can we do? Override the store? Create our own tables and completely disable ABP Background Job feature? We also did a ReplaceContext for the BackgroundJob module, but still we cannot see any SQL output in the console that involves doing an UPDATE on that table. Perhaps because we set that up wrong and our understanding of ABP plumbing is too underdeveloped at this stage.

I sincerely hope you are able to share some thoughts and insights to provide a direction for the next steps we can do to try to figure this out. We are probably going to make a small management page to see the current abandoned jobs and add a feature to retry them, so we can at least process the 'deadletter' queue this way, but we are still completely puzzled what part of ABP is querying this table, despite us believing that only our worker should be handling this table.

@liangshiwei thank you for your initial analysis. Is there any underlying explanation why this particular project is affected by it? We started a complete new project using ABP Suite 5.2 and even without the DisplayAttribute this project is fast and responsive.

@liangshiwei, what mail address can I forward the details to? Thanks.

Hi,

We have been integrating ABP Commercial Business into our new project and so far it has been a good experience. Getting our domain and entity models added, we initially opted for using Suite to generate the UI components, but considering and realizing the more unique requirements, eventually settled on adding them manually after studying the generated code.

We opted to move these requirements to their own module where appropriate, and together with two additional modules, Metronic 8 and Multilingual, we have been able to get the authentication and dashboard integration working fine with our data models. The pages are not crazy fast, but consistent, and the 'user experience' is fine considering the current stages of development. It took some getting used to, to figure out where everything should go, especially with the localization and modules, but we feel we are at a good state none the less.

However, we came to the point where we wanted to try and figure out the 'modal' experience, which is also part of the default generated website and the pages generated with Suite. We generated the CRUD experience for 1 particular model, studied the generated code, removed what we didn't need, and added what we did need (mostly Metronic styling). The auto-generated HTTP/API and proxy classes gave an additional hurdle to fully understand the minimal requirements to get it all working together, but we did and the result was good, visually.

We decided on a more traditional approach for the models, whereas the 'index' and 'list' pages are using Knockout JS, but we are using razor pages for loading the modal content. These modals have been using the generated suite ABP tag helpers where applicable and regular (Microsoft) tag-helpers to get the desired output. We do have some script bound to provide an auto-complete experience, but it is with the form rendering where we are experiencing the first serious slowdown.

Loading the 'Create' modal of the model, that is showing your typical "Name, Address" fields takes 3-4 seconds to load. We double checked the API call and verified that it completed in 60ms, so it's not the ABP backend causing a slow-down. Since it is a Create modal, there are no additional DB queries involved, in fact we removed everything that could potentially slow it down, returning an empty new FooViewModel() as the result, but still the dialog loaded extremely slow.

However, this did sped up the initial experience (not having to load all navigation properties, like list of companies, list of users) it did not speed up the modal loading part. We analyzed all we could think of, removing tag-helpers, removing the modal body, enabling trace logging, double-checking no EF queries are being fired. Perhaps slow scripts that could prevent the dialog from showing, but nothing explained the slow-down. We installed AppInsights, but no additional insights into what caused the slow-down. Logging output also revealed no additional information as there are simply 2-3 lines visible where it matches the route and then 3-4secs later an indication that the content is being shown.

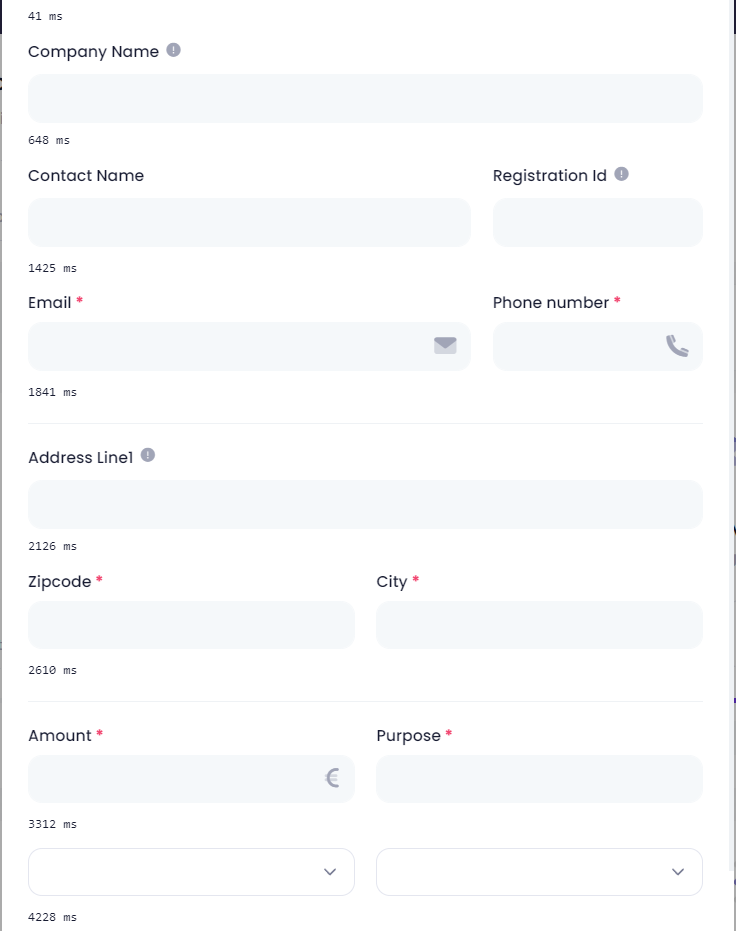

We then started to time the entire call, which is divided as follows:

We checked with F12 in Chrome/Edge to verify that the website was waiting for the server to render the modal, we also verified this by calling just the 'create-modal' route, and that is taking almost 4 seconds to render. We then removed all form/tag helpers on the modal, and it renders in ~100ms. Then every single form field, using a tag-helper adds 200-400ms delay, and as mentioned earlier, the two dropdowns containing localized Enum values, take upwards to 500ms each.

Checking the Network tab in Chrome reveals the total load time of the modal itself:

We then added a Stopwatch on the Razor page to show the rendering times of each control:

We tried quite a lot of things to determine what could, or should not be part of the issue:

@Html.DisplayFor and @Html.Input, dialog takes 200ms

We've partially executed and considered the following alternative approaches:

I realize that one of your first automated replies would probably be: Can you get us an isolated MVP project to showcase the issue? Well sadly, I cannot, our project is currently the smallest application to reproduce the problem.

However, we are more than willing to:

Any help and direction at this point will be appreciated, as we simply cannot figure out why the tag-helpers and localization are causing a slowdown. Did we perhaps make a fatal configuration error with regards to modules and inheritance? Is there another option to enable diagnostic logging to figure out what the "server" is doing for ~4secs?

We've banged our heads against this wall for about a full sprint now (2 weeks) and have reached a point where we need a solution, either hopefully with the expert assistance of your team, or by reaching a different conclusion on how to proceed further.

Thank you very much for your time and effort reading this very lengthy question.

@albert

You misread my comment completely. I am showing you the before and after from using RC and then Stable. It changes the make-up of the csproj files by adding modules directly as NuGet packages instead of project references.

Adding a module to a freshly generated website using ABP Suite v5.0.0 (Stable) yields the following error:

Error occurred while adding the module "Acme.Abp.Crm" to the solution "AcmeProject"...

Could not find a class derived from AbpModule in the project '<path-to-project>/<project-name>.csproj'.

This while there is a fully functional project and the AcmeAbpModule.cs file is located in the root of that location and is inheriting the AbpModule class, as it is a completely unmodified new project created by ABP Suite.

In addition, despite the error, the module is created in the .\modules\Acme.Abo.Crm location, and the csproj file is modified to load it. However, upon inspecting the file, it assumes that the project can be referenced as a NuGet package with version 5.0.0 as the assumed version?

In ABP Suite 5.0.0-rc.2

<ProjectReference Include="..\..\modules\Acme.Abp.Crm\src\Acme.Abp.Crm.Web\Acme.Abp.Crm.Web.csproj" />

In ABP Suite 5.0.0 (Stable)

<PackageReference Include="Acme.Abp.Crm.Web" Version="5.0.0" />

This breaks all the generated code in the project and logically it will not compile anymore. What am I missing here? Maybe it's time for a reboot..

Hi @albert,

After updating Suite to RC2 I get this error:

[12:00:50 ERR] Connection id "0HMDR0CIU98V0", Request id "0HMDR0CIU98V0:00000002": An unhandled exception was thrown by the application. Volo.Abp.AbpException: Could not find the bundle file '/libs/abp/core/abp.css' for the bundle 'Lepton.Global'!

Seems the wwwroot folder is missing in the %USERPROFILE%\.dotnet\tools\.store\volo.abp.suite\5.0.0-rc.2\volo.abp.suite\5.0.0-rc.2\tools\net6.0\any folder? When I created that folder and copied the files from the %USERPROFILE%\.dotnet\tools\.store\volo.abp.suite\5.0.0-rc.2\volo.abp.suite\5.0.0-rc.2\staticwebassets\ to that location, it started up and was usable again.

So either the staticwebassets are not being found, or the wwwroot is missing in the publish? (assumptions)

Thanks.