Activities of "kfrancis@clinicalsupportsystems.com"

I would expect that, no matter what, all job IDs fit in the field. Something should enforce that, e.g. the adapter for background jobs/workers.

Yes, and that's what we do with our own jobs. This job, however, is part of an internal ABP module that we don't control, so I can't shorten its ID.

I'm using ABP with Hangfire on SQL Server. There appears to be an issue with the `RecurringJobId` length, given Hangfire's general constraint on the `Key` column (`nvarchar(100)`).

- ABP Framework version: v8.2

- UI Type: MVC

- Database System: EF Core (SQL Server)

- Tiered (for MVC) or Auth Server Separated (for Angular): yes, tiered

- Exception message and full stack trace: (below)

- Steps to reproduce the issue:

```
[2024-07-04 15:21:31.520] [Fatal] DEV1-PC () <> Host terminated unexpectedly! Error: "An error occurred during the initialize Volo.Abp.Modularity.OnApplicationInitializationModuleLifecycleContributor phase of the module Volo.Abp.Identity.AbpIdentityProDomainModule, Volo.Abp.Identity.Pro.Domain, Version=8.2.0.0, Culture=neutral, PublicKeyToken=null: String or binary data would be truncated in table 'CabMD15.HangFire.Hash', column 'Key'. Truncated value: 'recurring-job:HangfirePeriodicBackgroundWorkerAdapter<IdentitySessionCleanupBackgroundWorker>.DoWork'.. See the inner exception for details."
Volo.Abp.AbpInitializationException: An error occurred during the initialize Volo.Abp.Modularity.OnApplicationInitializationModuleLifecycleContributor phase of the module Volo.Abp.Identity.AbpIdentityProDomainModule, Volo.Abp.Identity.Pro.Domain, Version=8.2.0.0, Culture=neutral, PublicKeyToken=null: String or binary data would be truncated in table 'CabMD15.HangFire.Hash', column 'Key'. Truncated value: 'recurring-job:HangfirePeriodicBackgroundWorkerAdapter<IdentitySessionCleanupBackgroundWorker>.DoWork'.. See the inner exception for details.
 ---> Microsoft.Data.SqlClient.SqlException (0x80131904): String or binary data would be truncated in table 'CabMD15.HangFire.Hash', column 'Key'. Truncated value: 'recurring-job:HangfirePeriodicBackgroundWorkerAdapter<IdentitySessionCleanupBackgroundWorker>.DoWork'.
   at void Microsoft.Data.SqlClient.SqlConnection.OnError(SqlException exception, bool breakConnection, Action<Action> wrapCloseInAction)
   at void Microsoft.Data.SqlClient.SqlInternalConnection.OnError(SqlException exception, bool breakConnection, Action<Action> wrapCloseInAction)
   at void Microsoft.Data.SqlClient.TdsParser.ThrowExceptionAndWarning(TdsParserStateObject stateObj, bool callerHasConnectionLock, bool asyncClose)
   at bool Microsoft.Data.SqlClient.TdsParser.TryRun(RunBehavior runBehavior, SqlCommand cmdHandler, SqlDataReader dataStream, BulkCopySimpleResultSet bulkCopyHandler, TdsParserStateObject stateObj, out bool dataReady)
   at void Microsoft.Data.SqlClient.SqlCommand.FinishExecuteReader(SqlDataReader ds, RunBehavior runBehavior, string resetOptionsString, bool isInternal, bool forDescribeParameterEncryption, bool shouldCacheForAlwaysEncrypted)
   at SqlDataReader Microsoft.Data.SqlClient.SqlCommand.RunExecuteReaderTds(CommandBehavior cmdBehavior, RunBehavior runBehavior, bool returnStream, bool isAsync, int timeout, out Task task, bool asyncWrite, bool inRetry, SqlDataReader ds, bool describeParameterEncryptionRequest)
   at SqlDataReader Microsoft.Data.SqlClient.SqlCommand.RunExecuteReader(CommandBehavior cmdBehavior, RunBehavior runBehavior, bool returnStream, TaskCompletionSource<object> completion, int timeout, out Task task, out bool usedCache, bool asyncWrite, bool inRetry, string method)
   at Task Microsoft.Data.SqlClient.SqlCommand.InternalExecuteNonQuery(TaskCompletionSource<object> completion, bool sendToPipe, int timeout, out bool usedCache, bool asyncWrite, bool inRetry, string methodName)
   at int Microsoft.Data.SqlClient.SqlCommand.ExecuteNonQuery()
   at void Hangfire.SqlServer.SqlCommandBatch.ExecuteNonQuery() in C:/projects/hangfire-525/src/Hangfire.SqlServer/SqlCommandBatch.cs:line 122
   at void Hangfire.SqlServer.SqlServerWriteOnlyTransaction.Commit()+(DbConnection connection, DbTransaction transaction) => { } in C:/projects/hangfire-525/src/Hangfire.SqlServer/SqlServerWriteOnlyTransaction.cs:line 100
   at void Hangfire.SqlServer.SqlServerStorage.UseTransaction(DbConnection dedicatedConnection, Action<DbConnection, DbTransaction> action)+(DbConnection connection, DbTransaction transaction) => { } in C:/projects/hangfire-525/src/Hangfire.SqlServer/SqlServerStorage.cs:line 272
   at void Hangfire.SqlServer.SqlServerStorage.UseTransaction(DbConnection dedicatedConnection, Action<DbConnection, DbTransaction> action)+(DbConnection connection) => { } in C:/projects/hangfire-525/src/Hangfire.SqlServer/SqlServerStorage.cs:line 319
   at T Hangfire.SqlServer.SqlServerStorage.UseConnection<T>(DbConnection dedicatedConnection, Func<DbConnection, T> func) in C:/projects/hangfire-525/src/Hangfire.SqlServer/SqlServerStorage.cs:line 257
   at T Hangfire.SqlServer.SqlServerStorage.UseTransaction<T>(DbConnection dedicatedConnection, Func<DbConnection, DbTransaction, T> func, IsolationLevel? isolationLevel) in C:/projects/hangfire-525/src/Hangfire.SqlServer/SqlServerStorage.cs:line 307
   at void Hangfire.SqlServer.SqlServerStorage.UseTransaction(DbConnection dedicatedConnection, Action<DbConnection, DbTransaction> action) in C:/projects/hangfire-525/src/Hangfire.SqlServer/SqlServerStorage.cs:line 270
   at void Hangfire.SqlServer.SqlServerWriteOnlyTransaction.Commit() in C:/projects/hangfire-525/src/Hangfire.SqlServer/SqlServerWriteOnlyTransaction.cs:line 69
   at void Hangfire.RecurringJobManager.AddOrUpdate(string recurringJobId, Job job, string cronExpression, RecurringJobOptions options) in C:/projects/hangfire-525/src/Hangfire.Core/RecurringJobManager.cs:line 148
   at void Hangfire.RecurringJobManagerExtensions.AddOrUpdate(IRecurringJobManager manager, string recurringJobId, Job job, string cronExpression, TimeZoneInfo timeZone, string queue) in C:/projects/hangfire-525/src/Hangfire.Core/RecurringJobManagerExtensions.cs:line 65
   at void Hangfire.RecurringJob.AddOrUpdate(Expression<Func<Task>> methodCall, string cronExpression, TimeZoneInfo timeZone, string queue) in C:/projects/hangfire-525/src/Hangfire.Core/RecurringJob.cs:line 379
   at async Task Volo.Abp.BackgroundWorkers.Hangfire.HangfireBackgroundWorkerManager.AddAsync(IBackgroundWorker worker, CancellationToken cancellationToken)
   at async Task Volo.Abp.Identity.AbpIdentityProDomainModule.OnApplicationInitializationAsync(ApplicationInitializationContext context)
   at void Nito.AsyncEx.Synchronous.TaskExtensions.WaitAndUnwrapException(Task task)
   at void Nito.AsyncEx.AsyncContext.Run(Action action)+(Task t) => { }
   at void Nito.AsyncEx.Synchronous.TaskExtensions.WaitAndUnwrapException(Task task)
   at void Nito.AsyncEx.AsyncContext.Run(Func<Task> action)
   at void Volo.Abp.Threading.AsyncHelper.RunSync(Func<Task> action)
   at void Volo.Abp.Identity.AbpIdentityProDomainModule.OnApplicationInitialization(ApplicationInitializationContext context)
   at void Volo.Abp.Modularity.OnApplicationInitializationModuleLifecycleContributor.Initialize(ApplicationInitializationContext context, IAbpModule module)
   at void Volo.Abp.Modularity.ModuleManager.InitializeModules(ApplicationInitializationContext context)
```
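The truncated value in the log is exactly 100 characters, which is the full width of the `Key` column. So the stored key, `recurring-job:` followed by the job ID, is longer than the column allows.

Below is a minimal sketch of how an adapter could guarantee a fit. `FitRecurringJobId` is a hypothetical helper, not an ABP or Hangfire API. It leaves short IDs unchanged. For longer IDs, it truncates and appends a short SHA-256 suffix, so distinct long IDs stay distinct. The sample ID assumes the untruncated method name is `DoWorkAsync`.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper: shortens a recurring job id so the stored key
// ("recurring-job:" + id) fits into an nvarchar(100) Key column.
static string FitRecurringJobId(string jobId, int maxKeyLength = 100)
{
    const string keyPrefix = "recurring-job:"; // prefix visible in the truncated key in the log
    int budget = maxKeyLength - keyPrefix.Length; // characters left for the id itself

    if (jobId.Length <= budget)
    {
        return jobId; // already fits: keep it unchanged
    }

    // Hash the full id so two long ids sharing a prefix still map to different keys.
    byte[] hash = SHA256.HashData(Encoding.UTF8.GetBytes(jobId));
    string suffix = "~" + Convert.ToHexString(hash, 0, 4); // "~" + 8 hex characters

    return jobId.Substring(0, budget - suffix.Length) + suffix;
}

// Assumed untruncated id (the log only shows the first 100 characters of the key).
string original = "HangfirePeriodicBackgroundWorkerAdapter<IdentitySessionCleanupBackgroundWorker>.DoWorkAsync";
string fitted = FitRecurringJobId(original);
Console.WriteLine($"{original.Length} -> {fitted.Length}: {fitted}");
```

A deterministic hash suffix, rather than a random one, matters here. Recurring jobs are added or updated by ID on every startup, so the same worker must always map to the same shortened ID. Otherwise a new job would be registered each time.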

We're seeing an issue when multi-tenancy is enabled and the tenant picker has been removed (as in https://community.abp.io/posts/hide-the-tenant-switch-of-the-login-page-4foaup7p). When a tenant is created, its admin user gets the same default username as every other tenant admin. This makes logging in by username difficult or impossible unless the admin logs in with their email address instead.

- ABP Framework version: v8.1.3

- UI Type: MVC

- Database System: EF Core (SQL Server)

- Tiered (for MVC) or Auth Server Separated (for Angular): yes, Tiered

To reproduce:

1. Log in as the non-tenant (host) admin.

2. Create a tenant, specifying the admin email and password.

3. Log out.

4. Attempt to log in using `admin` (the default username, which isn't obvious to the user creating the tenant) and the password specified in step 2.

Expected: should be able to log in.

Actual: can't log in.

This behavior does make sense, but what would be nice is:

- Allow the user creating the tenant to choose a unique admin username (you already allow changing it in the tenant "Set password" modal).

- Add an `IdentityDataSeedContributor.AdminUsernamePropertyName` property so we can modify `(Project)TenantDatabaseMigrationHandler` like this:

```csharp
// Seed data
using (var uow = _unitOfWorkManager.Begin(requiresNew: false, isTransactional: true))
{
    await _dataSeeder.SeedAsync(
        new DataSeedContext(tenantId)
            .WithProperty(IdentityDataSeedContributor.AdminUsernamePropertyName, adminUsername) // <-- NEW
            .WithProperty(IdentityDataSeedContributor.AdminEmailPropertyName, adminEmail)
            .WithProperty(IdentityDataSeedContributor.AdminPasswordPropertyName, adminPassword)
    );

    await uow.CompleteAsync();
}
```

Is that possible?
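Whatever form the feature takes, the `adminUsername` passed in the snippet above still has to be chosen somewhere. Below is a minimal sketch, assuming a hypothetical helper named `BuildTenantAdminUserName` (not an ABP API). It derives a per-tenant default such as `admin.acme-clinic` from the tenant name, so tenant admins no longer all share `admin`. ASP.NET Core Identity's default allowed username characters include `.` and `-`.

```csharp
using System;
using System.Text;

// Hypothetical helper (not part of ABP): builds a per-tenant default admin username
// by slugifying the tenant name and prefixing it with "admin.".
static string BuildTenantAdminUserName(string tenantName)
{
    var slug = new StringBuilder();
    foreach (char c in tenantName.Trim().ToLowerInvariant())
    {
        if ((c >= 'a' && c <= 'z') || (c >= '0' && c <= '9'))
        {
            slug.Append(c); // keep ASCII letters and digits
        }
        else if (slug.Length > 0 && slug[slug.Length - 1] != '-')
        {
            slug.Append('-'); // collapse any run of other characters into a single '-'
        }
    }

    string trimmed = slug.ToString().TrimEnd('-');
    return trimmed.Length == 0 ? "admin" : $"admin.{trimmed}"; // fall back to the current default
}

Console.WriteLine(BuildTenantAdminUserName("Acme Clinic")); // admin.acme-clinic
```

Distinct tenant names can still collapse to the same slug (for example "Acme Clinic" and "acme-clinic"). A real implementation would therefore need a uniqueness check, or would simply let the person creating the tenant type the username, as requested above.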

I'm working on adding plugin modules to handle client-specific logic. Background jobs suddenly stopped working, with the background job manager reverting to `NullBackgroundJobManager`.

I'm working on creating a repro repository.

- ABP Framework version: v8.0.2

- UI Type: MVC

- Database System: EF Core (SQL Server)

- Tiered (for MVC) or Auth Server Separated (for Angular): yes

With no other changes, commenting out the `options.PlugInSources.AddFolder` line makes background jobs work correctly again (though the plugin module is then not registered). Here's the relevant code:

```csharp
await builder.AddApplicationAsync<CabMDWebModule>(options =>
{
    // Hmm, works for now but what we want is a fixed location and if there are plugins, add them.
    var currentDirectory = options.Services.GetHostingEnvironment().ContentRootPath;
    var plugDllInPath = "";

    // Walk up (at most 10 levels) until we find the "src" folder, then point at the plugin's build output.
    for (var i = 0; i < 10; i++)
    {
        var parentDirectory = new DirectoryInfo(currentDirectory).Parent;
        if (parentDirectory == null)
        {
            break;
        }

        if (parentDirectory.Name == "src")
        {
#if DEBUG
            plugDllInPath = Path.Combine(parentDirectory.FullName, "CabMD.ClientPlugIn.RMA", "bin", "Debug", "net8.0");
#else
            plugDllInPath = Path.Combine(parentDirectory.FullName, "CabMD.ClientPlugIn.RMA", "bin", "Release", "net8.0");
#endif
            break;
        }

        currentDirectory = parentDirectory.FullName;
    }

    if (Path.Exists(plugDllInPath))
    {
        // delete *.deps.json files before adding the folder as the dep file causes an error
        foreach (var depFile in Directory.GetFiles(plugDllInPath, "*.deps.json"))
        {
            File.Delete(depFile);
        }

        options.PlugInSources.AddFolder(plugDllInPath);
    }
});
```
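One thing that may be worth checking while building the repro is an assumption, not something confirmed in this thread. The folder passed to `AddFolder` is the plugin's whole `bin/<config>/net8.0` output, so it may also contain copies of framework assemblies (for example `Volo.Abp.*.dll`) that the host has already loaded.

The hypothetical diagnostic below (`FindDuplicateAssemblies`, not an ABP API) lists DLLs in a folder whose assembly name matches one already loaded in the current process. It uses only `AssemblyName.GetAssemblyName` and `AppDomain.CurrentDomain.GetAssemblies()`.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Reflection;

// Hypothetical diagnostic: returns file names of DLLs in `folder` whose assembly name
// matches an assembly that is already loaded in the current AppDomain.
static List<string> FindDuplicateAssemblies(string folder)
{
    var loaded = new HashSet<string>(
        AppDomain.CurrentDomain.GetAssemblies().Select(a => a.GetName().Name ?? string.Empty),
        StringComparer.OrdinalIgnoreCase);

    var duplicates = new List<string>();
    if (!Directory.Exists(folder))
    {
        return duplicates; // nothing to inspect
    }

    foreach (var dll in Directory.GetFiles(folder, "*.dll"))
    {
        try
        {
            // Reads the name from the file's metadata; the assembly is not added to the app.
            var name = AssemblyName.GetAssemblyName(dll).Name;
            if (name != null && loaded.Contains(name))
            {
                duplicates.Add(Path.GetFileName(dll));
            }
        }
        catch (BadImageFormatException)
        {
            // Native or otherwise non-.NET DLL: skip it.
        }
    }

    return duplicates;
}

// Usage: pass the same folder that is given to options.PlugInSources.AddFolder(...).
foreach (var file in FindDuplicateAssemblies(args.Length > 0 ? args[0] : "."))
{
    Console.WriteLine($"Already loaded by the host: {file}");
}
```

If this reports framework assemblies, one option to try is copying only the plugin's own DLL(s) into a dedicated folder and passing that to `AddFolder` instead of the build output.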

hi

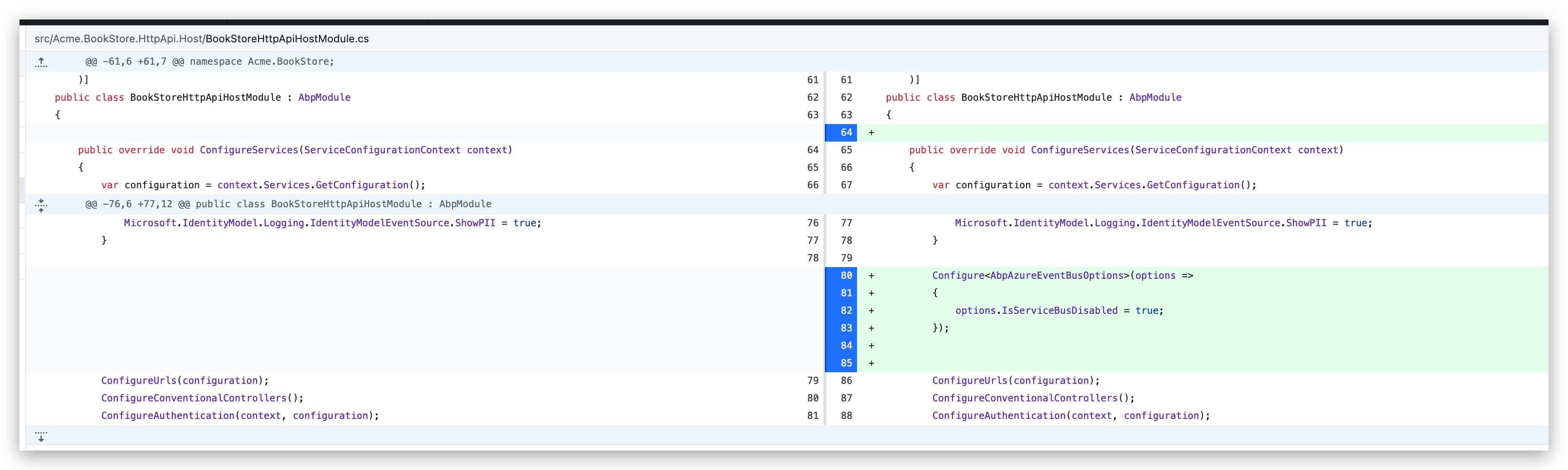

The solution is to set `IsServiceBusDisabled = true` in the HTTP API module.

Yes, that seems to have done the trick. We also had to generate new keys. For some reason, the default key created along with the Service Bus doesn't work, while a new key configured the same way does. Keys scoped to a topic work as well.

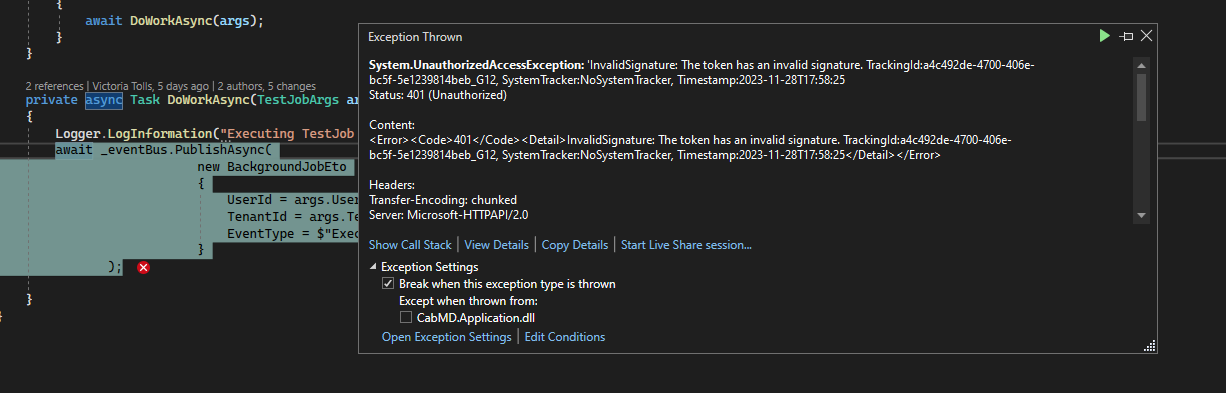

Also, the "Manage" permission is required; otherwise, this is what happens:

Yes, we understand that; that's by design. We know the background job runs whenever it runs, and the job itself publishes the distributed event. So why isn't the event received half the time?

Ultimately, this is all just to notify a user when a job completes, regardless of which page they are on, since reporting jobs can take a long time. If there's a better way, we're all ears. As far as I know there isn't one currently, and this should work.
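One reading consistent with the fix reported in this log (setting `IsServiceBusDisabled = true` in the HTTP API module) is a competing-consumer effect. This is an assumption, not a confirmed diagnosis. If two hosts receive from the same subscription, each message is handed to only one of them. A host that is the only one able to notify the user would then see roughly half of the events.

The toy simulation below has no Azure or ABP dependency. It uses simple round-robin as a stand-in for the broker's distribution across two hypothetical receivers, `Web` and `HttpApi`.

```csharp
using System;
using System.Collections.Generic;

// Toy model (no Azure SDK): one subscription, competing receivers.
// Each message is delivered to exactly one receiver; round-robin stands in for the broker.
static Dictionary<string, int> Deliver(int messageCount, params string[] receivers)
{
    var received = new Dictionary<string, int>();
    foreach (var receiver in receivers)
    {
        received[receiver] = 0;
    }

    for (int i = 0; i < messageCount; i++)
    {
        received[receivers[i % receivers.Length]]++;
    }

    return received;
}

// Assumption: only the "Web" host can push the notification to the user's browser.
var shared = Deliver(10, "Web", "HttpApi");
var webOnly = Deliver(10, "Web");
Console.WriteLine($"Both hosts listening: Web got {shared["Web"]} of 10"); // 5 of 10
Console.WriteLine($"Only Web listening:   Web got {webOnly["Web"]} of 10"); // 10 of 10
```

A real broker does not alternate strictly, but the split would be similar. Once only one host listens, every event reaches it.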

I'll share it with liming.ma@volosoft.com.