- ABP Framework version: v4.4.2

- UI type: Angular

- DB provider: EF Core (MySQL)

- Tiered (MVC) or Identity Server Separated (Angular): yes

Context:

Currently we have the File Management module configured to upload large files (1 - 5 GB) to AWS S3 with Volo.Abp.BlobStoring.Aws. We have noticed that when uploading files of 300 MB or more to the ABP server, the file is first loaded into memory or onto disk and only then pushed to AWS S3.

This creates a bottleneck for us, since we constantly need to upload files of approximately 1 to 5 GB.

How can I configure File Management to upload to S3 without having to wait for the entire file to be server-side?

- Steps to reproduce the issue:

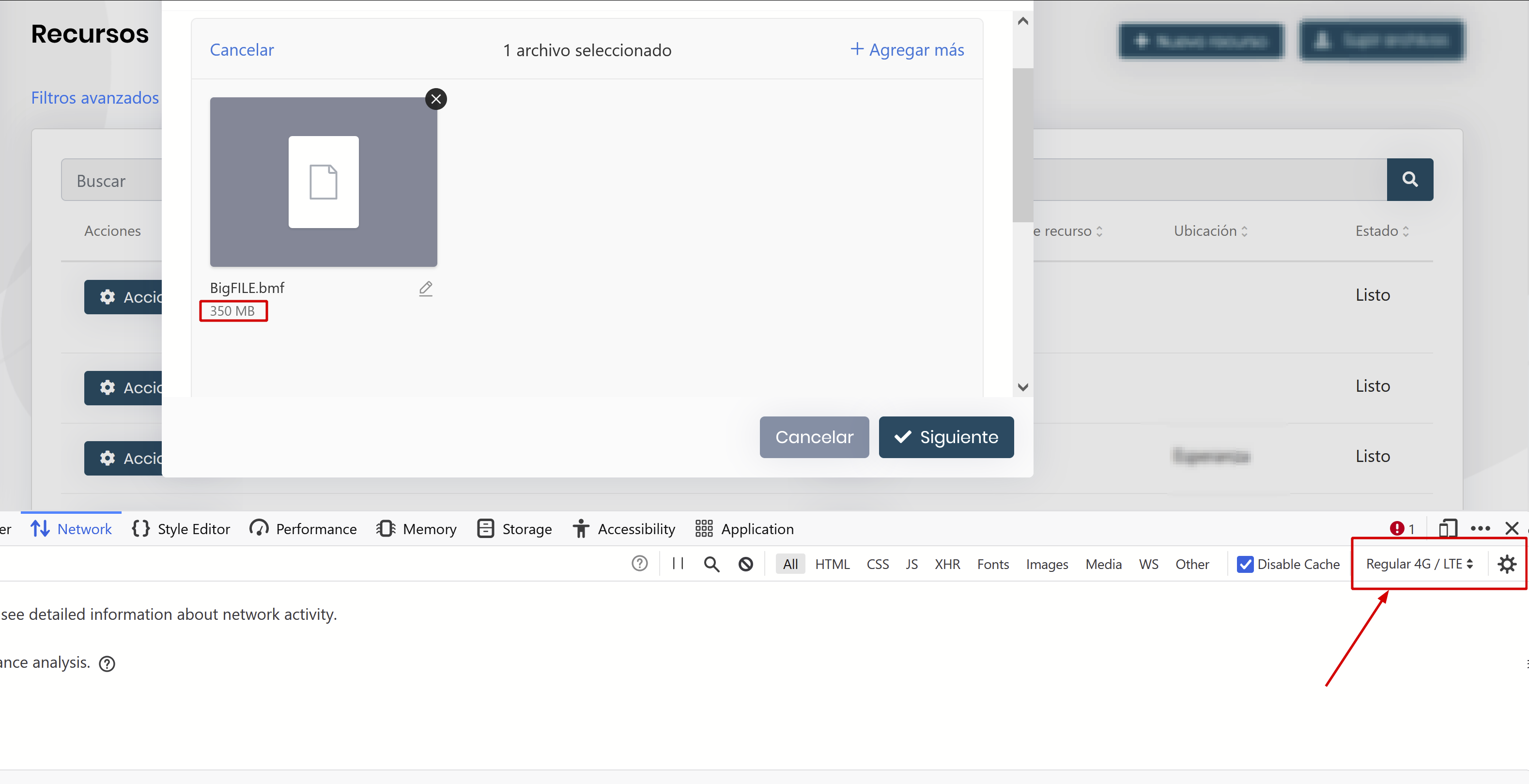

1.- If test in local environment, configure the network in the browser, as in the following image

2.- Apply traces in BlobStoring SaveAsync Function 3.- Upload the file

- Current Configurations

ApplicationModule:

    Configure<AbpBlobStoringOptions>(options =>
    {
        options.Containers.ConfigureAll((containerName, containerConfiguration) =>
        {
            containerConfiguration.UseAws(aws =>
            {
                aws.AccessKeyId = accessKeyId;
                aws.SecretAccessKey = secretAccessKey;
                aws.Region = region;
                aws.CreateContainerIfNotExists = false;
                aws.ContainerName = bucket;
            });
            containerConfiguration.ProviderType = typeof(MyAwsBlobProvider);
            containerConfiguration.IsMultiTenant = true;
        });
    });

Sample class used to trace the upload of the file:

    using System;
    using System.Threading.Tasks;
    using Amazon.S3.Transfer;
    using Microsoft.Extensions.Logging;
    using Microsoft.Extensions.Logging.Abstractions;
    using Volo.Abp.BlobStoring;
    using Volo.Abp.BlobStoring.Aws;

    namespace MyApp.Container
    {
        public class MyAwsBlobProvider : AwsBlobProvider
        {
            // Property-injected logger; falls back to a no-op logger.
            public ILogger<MyAwsBlobProvider> Logger { get; set; }

            public MyAwsBlobProvider(
                IAwsBlobNameCalculator awsBlobNameCalculator,
                IAmazonS3ClientFactory amazonS3ClientFactory,
                IBlobNormalizeNamingService blobNormalizeNamingService)
                : base(awsBlobNameCalculator, amazonS3ClientFactory, blobNormalizeNamingService)
            {
                Logger = NullLogger<MyAwsBlobProvider>.Instance;
            }

            public override async Task SaveAsync(BlobProviderSaveArgs args)
            {
                var blobName = AwsBlobNameCalculator.Calculate(args);
                var configuration = args.Configuration.GetAwsConfiguration();
                var containerName = GetContainerName(args);

                // Trace point: this only runs once the request body has reached the server.
                Logger.LogInformation(
                    "[MyAwsBlobProvider::SaveAsync] FileName={BlobName} Bucket={ContainerName}",
                    blobName, containerName);

                using (var amazonS3Client = await GetAmazonS3Client(args))
                {
                    if (!args.OverrideExisting && await BlobExistsAsync(amazonS3Client, containerName, blobName))
                    {
                        throw new BlobAlreadyExistsException(
                            $"Saving BLOB '{args.BlobName}' does already exists in the container '{containerName}'! Set {nameof(args.OverrideExisting)} if it should be overwritten.");
                    }

                    if (configuration.CreateContainerIfNotExists)
                    {
                        await CreateContainerIfNotExists(amazonS3Client, containerName);
                    }

                    var fileTransferUtility = new TransferUtility(amazonS3Client);

                    // SOURCE: https://docs.aws.amazon.com/AmazonS3/latest/userguide/mpu-upload-object.html
                    var fileTransferUtilityRequest = new TransferUtilityUploadRequest
                    {
                        BucketName = containerName,
                        PartSize = 6L * 1024 * 1024, // 6 MB
                        Key = blobName,
                        InputStream = args.BlobStream,
                        AutoResetStreamPosition = true,
                        AutoCloseStream = true
                    };

                    fileTransferUtilityRequest.UploadProgressEvent += displayProgress;

                    await fileTransferUtility.UploadAsync(fileTransferUtilityRequest);
                }
            }

            // Logs the upload progress whenever the percentage is a multiple of 10.
            private void displayProgress(object sender, UploadProgressArgs args)
            {
                if (args.PercentDone % 10 == 0)
                {
                    Logger.LogInformation("[MyAwsBlobProvider::SaveAsync] {Progress}", args);
                }
            }
        }
    }

Regards, Yolier

3 Answer(s)

-

Hi,

File Management uses the BLOB storing system, and this behavior is by design of the BLOB system.

You can customize the File Management module to upload files directly from the front end.

See: https://aws.amazon.com/blogs/compute/uploading-to-amazon-s3-directly-from-a-web-or-mobile-application/
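A minimal sketch of the front-end side of that approach, in TypeScript since the UI here is Angular. It assumes a custom backend endpoint (hypothetical, not part of ABP or the File Management module) has already returned a presigned S3 PUT URL. `PresignedUpload`, `PutRequest`, `HttpPut`, `buildPutRequest`, and `uploadDirect` are illustrative names, not an existing API. Note that S3 caps a single PUT at 5 GB, so files at the upper end of the range discussed here would likely need a multipart upload instead.

```typescript
// Hypothetical response of a custom backend endpoint that signs an S3 PUT URL.
interface PresignedUpload {
  url: string;         // presigned S3 PUT URL (assumed to be short-lived)
  contentType: string; // should match the Content-Type the URL was signed with
}

// Request options for the PUT. Generic over the body type so the sketch
// compiles without DOM typings (in the browser, B would be File or Blob).
interface PutRequest<B> {
  method: "PUT";
  headers: Record<string, string>;
  body: B;
}

// Injected HTTP function. In the browser this could be a thin wrapper
// such as (url, init) => fetch(url, init).
type HttpPut<B> = (
  url: string,
  init: PutRequest<B>
) => Promise<{ ok: boolean; status: number }>;

// Pure helper: builds the request without performing any I/O.
function buildPutRequest<B>(body: B, contentType: string): PutRequest<B> {
  return { method: "PUT", headers: { "Content-Type": contentType }, body };
}

// Sends the file straight to S3, so the bytes never pass through the ABP server.
async function uploadDirect<B>(
  file: B,
  target: PresignedUpload,
  httpPut: HttpPut<B>
): Promise<void> {
  const response = await httpPut(target.url, buildPutRequest(file, target.contentType));
  if (!response.ok) {
    throw new Error(`S3 upload failed with HTTP status ${response.status}`);
  }
}
```

After a successful upload, the front end would still have to tell the backend about the new object so that File Management can register it; that step depends on how the module is customized and is not shown.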

-

Hello, I think the problem here is how ABP manages streams. ABP FileManager uploads the entire file to the server before starting to upload it to S3, instead of passing chunks from the server to S3 as they arrive. Ideally, ABP would take the parts and upload them without waiting for the whole file to be on the server, which would make better use of the server's resources.

This is one of the many threads where this type of implementation is discussed (in this case with Node.js): https://stackoverflow.com/questions/21657700/s3-file-upload-stream-using-node-js

I hope the ABP team can integrate this in a future release.

Best, Moises

-

Hi,

As I said: https://support.abp.io/QA/Questions/1919#answer-760eb784-bece-e6d0-61f6-39ff469e0c79

This is by design of the BLOB system: it requires uploading the file stream to the backend.

> How can I configure File Management to upload to S3 without having to wait for the entire file to be server-side?

There is no such configuration to enable direct front-end upload; you need to implement it yourself.
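For files in the 1 to 5 GB range, one possible starting point for such a custom implementation is an S3 multipart upload driven from the browser. The sketch below covers only the part-planning arithmetic, based on S3's documented limits (every part except the last must be at least 5 MiB, and an upload may have at most 10,000 parts). `PartRange` and `planParts` are hypothetical names, and obtaining a presigned URL per part from the backend is assumed to happen elsewhere.

```typescript
// One contiguous byte range of the file; `end` is exclusive, as in Blob.slice.
interface PartRange {
  partNumber: number; // S3 part numbers start at 1
  start: number;
  end: number;
}

const MIN_PART_SIZE = 5 * 1024 * 1024; // S3 minimum for every part except the last
const MAX_PARTS = 10000;               // S3 maximum number of parts per upload

// Splits a file of `fileSize` bytes into ranges of at most `partSize` bytes.
function planParts(fileSize: number, partSize: number): PartRange[] {
  if (partSize < MIN_PART_SIZE) {
    throw new Error("partSize must be at least 5 MiB");
  }
  const count = Math.ceil(fileSize / partSize);
  if (count > MAX_PARTS) {
    throw new Error("too many parts; choose a larger partSize");
  }
  const parts: PartRange[] = [];
  for (let i = 0; i < count; i++) {
    parts.push({
      partNumber: i + 1,
      start: i * partSize,
      end: Math.min((i + 1) * partSize, fileSize), // last part may be shorter
    });
  }
  return parts;
}
```

Each range could then be passed to `file.slice(start, end)` and sent with a PUT to that part's presigned URL. Completing the upload requires the ETag S3 returns for each part, which in a browser usually means the bucket's CORS configuration has to expose the `ETag` header.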